Search engine crawlers

Definition

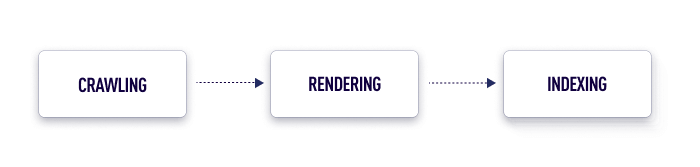

Search engine crawlers, also known as web crawlers or spiders, are programs that search engines use to discover and index new and updated webpages[1]. A crawler visits a website, follows the links it finds there to reach further pages, and adds those pages to the search engine's index.
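The link-following behaviour described above can be illustrated with a minimal sketch. It is not how any particular search engine implements crawling: the `PAGES` dictionary is a hypothetical in-memory stand-in for a website (a real crawler would fetch pages over HTTP), and the names `LinkExtractor` and `crawl` are made up for this example.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin

# Hypothetical in-memory "website": URL -> HTML.
# A real crawler would download these pages instead.
PAGES = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog/">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog/": '<a href="post-1">Post</a> '
                                 '<a href="https://other.example/">External</a>',
    "https://example.com/blog/post-1": '<a href="/about#team">Team</a>',
}


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, pages):
    """Breadth-first discovery of the pages reachable from start_url."""
    seen = {start_url}          # URLs already queued, to avoid revisiting
    queue = deque([start_url])
    discovered = []             # pages actually visited, in visit order
    while queue:
        url = queue.popleft()
        html = pages.get(url)
        if html is None:
            continue            # not part of the toy site (e.g. an external link)
        discovered.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop "#fragment" parts so that
            # /about and /about#team count as the same page.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return discovered


print(crawl("https://example.com/", PAGES))
```

A page that no other page links to would never enter the queue in this sketch, which is one reason the sitemap and internal-linking tactics discussed later in this entry matter.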

How crawlers work

When a search engine crawler visits a webpage, it analyzes the content of the page and adds it to the search engine's index. The search engine can then use this index to quickly retrieve relevant pages in response to user searches.

Crawlers are what keep this index current: by continually discovering new pages and revisiting updated ones, they ensure that the most up-to-date and relevant information is available to users.
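To show why an index makes retrieval fast, here is a small sketch of an inverted index, a common data structure that maps each word to the pages containing it. It is an illustration under simplifying assumptions (no ranking, no stemming, exact word matches only); the `DOCS` data and the function names are hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical crawled pages: URL -> text extracted from the page.
DOCS = {
    "/crawlers": "Crawlers discover and index webpages",
    "/sitemaps": "A sitemap lists webpages for crawlers",
    "/alt-text": "Alt text describes images",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """Map every word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in docs.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every word of the query, sorted."""
    words = tokenize(query)
    if not words:
        return []
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return sorted(results)


index = build_index(DOCS)
print(search(index, "crawlers webpages"))
print(search(index, "images"))
```

Answering a query only requires looking up each query word in the index and intersecting the resulting sets, rather than rereading every stored page.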

Crawlability in SEO

Crawlability describes how easily search engine crawlers can discover and index the pages on a website. Optimizing it is an important aspect of search engine optimization (SEO), as it can improve the website's visibility on search engine result pages (SERPs) and attract more organic traffic.

Improving crawlability

There are several tactics that can be used to optimize a website's crawlability for SEO:

- Use a sitemap: A sitemap is a file that lists the pages on a website that you want search engines to crawl, optionally with metadata such as when each page was last modified. Submitting a sitemap to search engines can make it easier for crawlers to discover and index the pages on the website.

- Use descriptive and relevant URLs: Descriptive and relevant URLs make it easier for both users and crawlers to understand the content of a webpage.

- Use clear and organized navigation: A clear and organized navigation structure makes it easier for crawlers to discover and index the pages on the website.

- Use descriptive and relevant title tags and meta descriptions: Title tags and meta descriptions are elements in the HTML of a webpage that summarize its content. Descriptive and relevant ones can improve the visibility of the website on SERPs and make its listings more attractive to users.

- Use header tags: Header tags (H1 to H6) structure the content of a webpage and make it easier for crawlers to understand the hierarchy and organization of that content.

- Use alt text for images: Alt text is a text description of an image, added in the image's HTML, that helps search crawlers better understand what the image shows.

- Use internal linking: Internal linking is the practice of linking to other pages on your own website from within your content. Because crawlers discover pages by following links, this improves the crawlability of the website and helps new pages get indexed.

- Use responsive design: A responsive design ensures that the website is optimized for viewing on different devices and screen sizes[2]. Because every device is served the same pages, this can make it easier for crawlers to access and index the pages on the site.

- Use clean and valid HTML code: Clean and valid HTML can improve the crawlability of the website by making it easier for crawlers to parse and index the content of the pages.

- Avoid cloaking: Cloaking is the practice of presenting different content to users and search engines. This can be seen as deceptive and can result in the website being penalized by search engines. It is important to avoid cloaking in order to ensure that the website is crawled and indexed properly.
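Several of the on-page tactics above (title tags, meta descriptions, header tags, alt text, internal links) can be checked automatically. The following is a rough sketch of such a check using Python's standard `html.parser` module. The `PageAudit` class, the `audit` function, the sample page, and the specific rules are assumptions made for illustration; they are not criteria published by any search engine.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class PageAudit(HTMLParser):
    """Collects the on-page elements relevant to the checklist above."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.title = ""
        self.meta_description = None
        self.headings = []             # heading tags in document order
        self.images_missing_alt = []   # src of images with no or blank alt
        self.internal_links = []       # absolute URLs on the same host
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content")
        elif tag in HEADING_TAGS:
            self.headings.append(tag)
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            # Blank alt can be legitimate for purely decorative images,
            # so treat this as a prompt for review, not an error.
            self.images_missing_alt.append(attrs.get("src"))
        elif tag == "a" and attrs.get("href"):
            target = urljoin(self.page_url, attrs["href"])
            if urlparse(target).netloc == urlparse(self.page_url).netloc:
                self.internal_links.append(target)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit(page_url, html):
    """Return a list of human-readable findings for one page."""
    page = PageAudit(page_url)
    page.feed(html)
    issues = []
    if not page.title.strip():
        issues.append("missing <title>")
    if not page.meta_description:
        issues.append("missing meta description")
    if "h1" not in page.headings:
        issues.append("no <h1> heading")
    for src in page.images_missing_alt:
        issues.append(f"image without alt text: {src}")
    if not page.internal_links:
        issues.append("no internal links found")
    return issues


# Hypothetical page used only to demonstrate the checks.
SAMPLE = """
<html><head><title>Blue widgets</title></head>
<body>
<h2>Specs</h2>
<img src="/img/widget.png">
<img src="/img/logo.png" alt="Acme logo">
<a href="https://other.example/">Partner</a>
</body></html>
"""

for issue in audit("https://example.com/widgets", SAMPLE):
    print(issue)
```

The findings are meant as prompts for manual review. A check like this says nothing about sitemaps, responsive design, or cloaking, which need to be verified separately.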

Related links

How Google Crawler Works: SEO Starter-Pack Guide

How to Manage Crawl Budget and Boost Your Presence in Search